https://www.sqlite.org/backup.html. IMO part of that is that web applications tend to be inefficient compared to something like Unix CLI utilities, so to get performance you can end up with massive amounts of horizontal scaling. On the other hand, the lack of performance /usually/ buys you higher productivity, so you can make product changes faster (you let a GC manage the memory to save time coding, but introduce GC overhead).

A kludge (I forget from where) for low-traffic sites is one SQLite DB per user. This means when the SQLite write call returns, the data will be geographically replicated, and the second disk can be used as a failover. So there can be a write every few seconds or every minute for every active user, but you only need to do a read a few times at the beginning of a new session. SQLite must serialize writes, which makes it a poor fit for a highly parallel, write-heavy workload. Just updated it to an archive link. * I'm worried about my datacenter blowing up: Transactions have to be committed to more than one DB in more than one DC before returning. Writing a custom backend to do tricks with SQLite DLLs is extremely satisfying. What your application needs to do is take whatever regex library it's using, make (through whatever FFI method it's using) a C function that interfaces with it, and register it with SQLite. I wonder if a fork/"new version" could address this. We do this for money after all. I initially had low expectations because it's such a weird use case, but it's been totally reliable. It's just that some of us got obsessed with minimalism lately. We just started enhancing our SQL dialect with new functions which are implemented in C# code. I want to back up the database at high frequency, but the cost of a full backup is not acceptable. This is the only source I know of that gives an overview of closures.c so it's worth the read. - Disk snapshots are incremental, and are stored in Cloud Storage, which is geo-replicated across regions (E.g. Thanks for pointing it out! But plugins use so many MySQL-specific features that it was hopeless. But not wanting to get out of your comfort zone is a completely valid stance to take. We use sql.js in production (. 
An embedded DB with an optional network protocol, but without raft, replication, etc, seems "normal" to me. Horizontal scaling has been employed only as a means of backup instances with a load balancer in front of them. In Python see: Data validation is a dangerous method to try to prevent SQL injection. SQLite is so robust that I bet most websites could use it without really needing to move onto a client/server RDBMS. The only surefire method is to use parameterized queries, which you should be doing anyway. With the mind-boggling power of computers today, it pays off to be able to make stuff small and still very functional. Think of SQLite as having a weird dialect where `Col1 INTEGER` is spelled `Col1 INTEGER CHECK (typeof(Col1) IN ('integer', 'null'))`. I just want to be able to deploy updates during business hours without downtime. Which is what any RDBMS would have to do anyway. I've been thinking about this idea; it's not officially endorsed by SQLite, but in theory it sounds very plausible. SQLite underpins large parts of both iOS and Android, so all of them? In my use case, files are shared between different instances of the application, usually without user intervention, but there's an attack vector to be addressed here.
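
As a minimal sketch of the parameterized-query advice above, using Python's standard `sqlite3` module: the `users` table, the `find_user` helper, and the sample input are hypothetical names made up for illustration.

```python
import sqlite3

def find_user(conn, name):
    # The "?" placeholder binds `name` as a value; it is never spliced
    # into the SQL text, so quotes inside it cannot change the query.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

# A classic injection attempt is treated as an (unmatched) literal name.
hostile = "alice' OR '1'='1"
normal_rows = find_user(conn, "alice")
hostile_rows = find_user(conn, hostile)
```

With string concatenation the hostile input would have matched every row; with binding it simply matches nothing.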

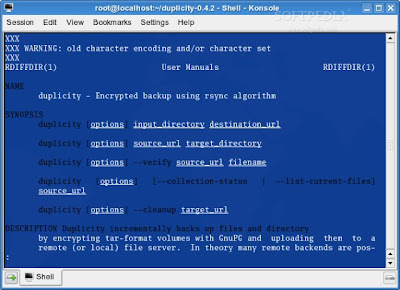

I'd much rather SQLite not waste its time on implementing features they're not good at, leaving that to the tools we already have available for rolling file backups, and instead spend their time and effort on offering the best file-based database system they can. In most host languages, it's not hard at all to get whatever you need for datetimes if you are using a host language that has a decent datetime library itself (and, as a bonus, you then don't have to worry about subtle differences between manipulations of datetimes through SQL and manipulations through other mechanisms in the app). > There is nothing more convenient than SQLite for analyzing and transforming JSON. It is designed for OLAP instead of OLTP, but it uses Postgres syntax and types! What is your setup like? Again, this is not about some problem with SQLite, but just me not having experience using it this way. Imagine you want to update the lastLogin field for a logged-in user, for example. - A regionally replicated disk will replicate writes synchronously to another zone (another data centre 100km away). A non-technical user could transfer hosts or back up their data by copying their database file like it was a photo or an Excel document. If you're unlucky, you can get a corrupted copy. It's another "human" issue, but it's unrelated to org charts.

EDIT: It's actually 1.2MB. A big database is not an issue. This sounds interesting. This is acknowledged as a likely mistake, but one that will never be fixed due to backward compatibility: > Flexible typing is considered a feature of SQLite, not a bug. (Heck, even failover is just a file copy initiated "when your health check sees there's a problem"). It's not principally about the concurrent performance, rather it's the administrative tasks. I believe Simon, the creator, frequents HN too and is a true SQLite power user :-). Right, and Expensify plausibly solved this by wrapping SQLite to produce a different DB, BedrockDb. That's not the case, no; you usually access your database with a thread pool. There seems to be such a subset for C, for example, which multiple compilers all interpret the same way, and SQL is a standardized language, too. If your web app is mostly reading and writing single rows, yeah, SQLite is just fine. Isn't there a useful SQL subset which allows you to switch from one database to another without rewriting? It just really varies depending on the type of application. Datetimes really are the Achilles heel of SQLite. I don't have any data on this, but I do know that a significant share (if not the majority) of WordPress sites now aren't even blogs.
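
To make the flexible-typing point concrete, here is a small sketch in Python's `sqlite3` that contrasts a plain `INTEGER` column with one carrying the opt-in `CHECK (typeof(...))` constraint quoted earlier in the thread. The table names `loose` and `checked` are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE loose (col1 INTEGER)")
conn.execute(
    "CREATE TABLE checked ("
    "col1 INTEGER CHECK (typeof(col1) IN ('integer', 'null')))"
)

# Flexible typing: a non-numeric string is accepted and stored as text.
conn.execute("INSERT INTO loose VALUES ('not a number')")
stored_type = conn.execute("SELECT typeof(col1) FROM loose").fetchone()[0]

# With the CHECK constraint the same insert is refused.
try:
    conn.execute("INSERT INTO checked VALUES ('not a number')")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

# Integers and NULL still pass the constraint.
conn.execute("INSERT INTO checked VALUES (42)")
conn.execute("INSERT INTO checked VALUES (NULL)")
checked_rows = conn.execute("SELECT COUNT(*) FROM checked").fetchone()[0]
```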

Has anyone tested such an HA scenario? Thanks for sharing this. I both started a site and helped a family convert an old static site to PicoCMS and really had no complaints. You typically need to offload transactions to a queue in a daemon thread. Does anyone think there is a way to make it work while the database is in use? E.g. WAL is turned on and stays on, I think. It seems like you could at least make type-checking an opt-in mode. 1: Actually this is a feature. It's just a fast Key-Value store with secondary indexes that's in your browser. Friendly reminder that you shouldn't spend time fine-tuning your horizontal autoscaler in k8s before making money. `ALTER TABLE old_table_name RENAME TO new_table_name`, `ALTER TABLE table_name ADD COLUMN column_name data_type qualifier`. For the same reason I'm using nodejs: to be able to run the same code anywhere.
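
The "offload transactions to a queue in a daemon thread" idea above could look roughly like the following. This is only a sketch under assumptions: the `WriterThread` class, its `submit`/`stop` methods, and the `errors` list are hypothetical names, not an API from any library mentioned in the thread.

```python
import queue
import sqlite3
import threading

class WriterThread(threading.Thread):
    """Owns the only write connection and applies queued statements in order."""

    def __init__(self, path):
        super().__init__(daemon=True)
        self.path = path
        self.jobs = queue.Queue()
        self.errors = []

    def run(self):
        # The connection is created inside the thread that uses it.
        conn = sqlite3.connect(self.path)
        while True:
            job = self.jobs.get()
            if job is None:  # sentinel: shut down
                break
            sql, params = job
            try:
                with conn:  # one transaction per job: commit or roll back
                    conn.execute(sql, params)
            except sqlite3.Error as exc:
                self.errors.append(exc)
        conn.close()

    def submit(self, sql, params=()):
        self.jobs.put((sql, params))

    def stop(self):
        self.jobs.put(None)
        self.join()
```

Request handlers call `submit(...)` and return immediately; reads can use their own connections. The trade-off is that a caller does not learn whether its write succeeded unless some reply channel is added.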

You will for your blog series that you mention prominently on your resume that gets you your next Senior Architect gig. Back up the SQLite memory database to disk. Say we use sha256 (which is what the new git version uses), so it will be a file (e.g. backups/2020-02-22-19-12-00.txt). My SQL knowledge has been a hodgepodge of random stuff for years, so maybe going through your course will help fill in the gaps. Like, sqlite2 (v1.0, etc.). This strategy will vary by application. Developers can deploy and publish new services with minimal effort, and most of the operational complexity is managed by the platform. Obviously, you are going to want to spend a couple minutes thinking about referential integrity.

SQLite originally comes from the Tcl world and thus it is somewhat natural that it follows Tcl's stringly-typed object model. The first two letters are used as the directory name so we don't have too many files in a directory. The web would be a better place if a tool as powerful as SQLite was available by default on billions of devices. A lot of downtime occurs because of overly complicated systems, so running a single process on a single server can give you relatively high uptime. To me this is obviating a lot of the advantage of SQLite over any other RDBMS. Just back up the file, do whatever, then overwrite the file. I would use SQLite on a single-machine web server. Good architectures address the requirements of the systems being developed. But if there's substantial and complex logic involved, it has its limits. I should revisit this policy now that you can run a truly huge site off a 1U slot (I work for an Alexa top10k, and our compute would fit comfortably in 1U); computers are so fast that vertical scaling is probably a viable option. You can even use the hashes file as a way to sync/restore the SQLite file. But that is really it. I do also sometimes make use of gron[0] since it integrates well with standard Unix tools.
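
The hash-based incremental backup idea scattered through this thread (sha256 per page, a git-like objects directory keyed by the first two hex characters, and a hashes file per backup) might be sketched as below. Everything here is an assumption for illustration: the function names, the `objects/` layout, and the 4096-byte page size. It also assumes the input is a consistent copy of the database (for example one produced by the SQLite backup API), not a file that is being written to.

```python
import hashlib
from pathlib import Path

def backup_pages(db_path, backup_dir, manifest_name, page_size=4096):
    """Store each page once by content hash; write the ordered hash list."""
    backup_dir = Path(backup_dir)
    hashes = []
    with open(db_path, "rb") as f:
        while True:
            page = f.read(page_size)
            if not page:
                break
            digest = hashlib.sha256(page).hexdigest()
            # First two hex characters become the directory name.
            obj = backup_dir / "objects" / digest[:2] / digest[2:]
            if not obj.exists():  # unchanged pages cost nothing extra
                obj.parent.mkdir(parents=True, exist_ok=True)
                obj.write_bytes(page)
            hashes.append(digest)
    manifest = backup_dir / manifest_name
    manifest.write_text("\n".join(hashes))
    return manifest

def restore_pages(backup_dir, manifest_name, out_path):
    """Rebuild a database file by concatenating pages listed in a manifest."""
    backup_dir = Path(backup_dir)
    hashes = (backup_dir / manifest_name).read_text().split()
    with open(out_path, "wb") as out:
        for digest in hashes:
            out.write((backup_dir / "objects" / digest[:2] / digest[2:]).read_bytes())
```

Each later backup only adds objects for pages whose content changed, which is what keeps high-frequency backups cheap.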

If it's not transactions, concurrent reading, backups, or administrative tasks then what's the issue you run into? If a write was occurring, having a simultaneous request wait 100 milliseconds was no big deal. In my opinion practicality and prior experience often beat what is strictly necessary or "best". I wish SQLite had a PostgreSQL compatibility layer. It only became a problem when I got tired of ops and wanted to put it on Heroku, at which time I had to migrate to Postgres. Many product managers I talk to want me to build something that is as flexible as possible and solves all problems for everyone, everywhere. SQLite has a keyword for regular expression matching, but it has no implementation for it. There's dqlite & rqlite for providing HA over SQLite. Especially since most of these sites are also running on $3/mo shared hosts, switching to SQLite would be a substantial improvement. SQLite doesn't really enforce column types[0]; the choice is really puzzling to me. Thanks for pointing it out :). We have a pretty active Slack if you need help getting up and running. Can you provide some examples of frameworks with such a pattern? Services like Fargate or Cloud Run, or managed Kubernetes, can do a good job of this. Most languages and web frameworks don't have SQLite drivers out of the box (or have extremely bad ones). I've used SQLite a bit, but not enough to say I know where you run into performance issues. SQLite isn't really designed to have 4-5 network-connected apps using it as a shared data store. I've always been surprised WordPress didn't go with SQLite though - it'd have made deployment so much easier for 99% of users running a small, simple blog. This is because opening a SQLite database file makes the SQLite library execute any arbitrary code that may be stored in that file. The database file is set read-only at the OS level (chmod a-w) and database write attempts give an error. It's more accurate to phrase point 1. as SQLite column constraints are opt-in. If you are in WAL mode, you can have unlimited readers while one writer is writing.
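
On the REGEXP keyword mentioned above: the operator parses, but the application has to supply a `regexp()` function. Below is a short sketch using Python's `sqlite3.Connection.create_function`; the `hosts` table and its contents are made up for the example. SQLite evaluates `X REGEXP Y` by calling `regexp(Y, X)`, so the pattern arrives as the first argument.

```python
import re
import sqlite3

def regexp(pattern, value):
    # Called by SQLite as regexp(pattern, value) for "value REGEXP pattern".
    if value is None:
        return 0
    return int(re.search(pattern, value) is not None)

conn = sqlite3.connect(":memory:")
conn.create_function("REGEXP", 2, regexp)

conn.execute("CREATE TABLE hosts (name TEXT)")
conn.executemany(
    "INSERT INTO hosts VALUES (?)", [("web-01",), ("db-01",), ("web-02",)]
)

matches = [
    row[0]
    for row in conn.execute(
        "SELECT name FROM hosts WHERE name REGEXP ? ORDER BY name",
        (r"^web-\d+$",),
    )
]
```

Without the `create_function` call, the same query fails with an error about a missing `REGEXP` function.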

jq really needs a repl. Otherwise, I do not see how the attack vector discussed in the video could be "fixed". This approach looks interesting. It's truly a shame that Web SQL was opposed by Mozilla. But I could read from multiple nodes, but for some reason the delete was failing (could not delete a database). I know you put "problem" in quotes, but in case you haven't seen it, this document was posted here a little while ago: That's interesting! It supports multiple readers, and writes are atomic - no reader should ever get corrupt data just because there is a write happening in parallel. Fewer issues with size limits and string serialisation as you can chuck Blob instances straight into it. Other processes or commands on the system can see partial state. This applies to both the rollback journal & the WAL modes. The documentation does not mention it (and also doesn't warn of the security concern around enabling them). That's amazing! You can put a simple lock statement around a SQLiteConnection instance to achieve the same effect with 100% reliability, but with dramatically lower latency than what hosted SQL offerings can provide. But don't make the mistake of thinking that it doesn't matter. I think that the absence of 'high availability' is not an issue for small websites or web apps. SQLite itself does not have those features, but at the disk level you can get those features: > "No, the issue is it doesn't have high availability features: failover, snapshots, concurrent backups, etc.". It's less about performance than about resilience IMO. Application-defined functions are very useful. These days however they could, at least, do a nice wizard to ask "do you want to run a blog only?" I typically see read transactions with multiple queries around ~50s. Clean install.. 
copy the folder, delete the database. While no transaction is open, rsync of the database will be OK. I've built a complex CRM that handles 2.1 million USD in transactions every year. Looking at the architecture page [0] on that same website, the second heading is "Embedding". Your database will get corrupted. That number will obviously depend on the read/write ratio of any given website; but it's hard to imagine any website where [EDIT: the maximum number of concurrent users] is actually "1". Remember, the person I was replying to claimed SQLite was "not useable outside of the model where you have a single user". Eventually the app was able to withstand up to 9000 requests per second, but none of that was an achievement of SQLite; we just evaded the database, Django and Python altogether on the majority of requests. analyzing your GitHub or Twitter use, say) using SQLite and Datasette. Still, for the cloud I started preferring going for Rust (where applicable!) Having a mid-range AMD EPYC server can serve most businesses out there without ever having more than 40% CPU usage. You can achieve 99.95% uptime with 4h of downtime a year. The article addresses this. Europe and US). A single file contains your entire database. Weren't all of these issues quickly fixed by the developers after being reported? While we are on this topic, the most widespread OS in the world, Android, also has extremely low-quality SQLite drivers despite shipping SQLite as the default database for many years. SQLite might let you get away with inserting data which PG refuses to handle. That requires a level of configuration and control that is contrary to the mission of SQLite to be embedded. And for many, that will be in the thousands or hundreds of thousands. 
While a transaction is open, rsync of the database will result in corruption. Because of that, I'm more likely to reach for / recommend PostgreSQL instead. It's the generate-everything-in-every-request crowd that needs an HA solution and. They don't, and shouldn't, add that kind of functionality.

I'd be interested in hearing more about this design.

Nobody said you have to use a single database/file. Exactly what Bloomberg did with Comdb2: https://github.com/bloomberg/comdb2.

Looking at the load of our servers I can comfortably put all our software on my gaming machine (which is mid-range!) If you're lucky, you just get some wonky data. Connections get promoted to a write lock when you do a write DDL or if you begin an IMMEDIATE transaction. I meant the "copy a few pages then sleep for some time to release the database lock" part. > Why do you say it works for "small" websites but presumably not large ones? > Dqlite is a fast, embedded, persistent SQL database with Raft consensus that is perfect for fault-tolerant IoT and Edge devices. As Noah has pointed out, it is dangerous. With the latest changes to SQLite, we don't even have to do this anymore as we can return the value as part of a single invocation. Edit: Sometimes you have to lie and lead people down the wrong path to enlightenment ;). That's close. Depending on the use case (SaaS offerings with per-customer shards) you can actually scale SQLite quite high. Yes, both of those are alternative solutions. Ideal? I disagree. WAL will checkpoint the log into the main database file from time to time. I was a casual developer (i.e. Basically, you can have any number of concurrent. I can't remember where I read this now, but about a year ago I found material saying that sqlite3 over NFS is a very bad idea, and even though it's supported it's also strongly advised against as a use case. Although on reflection, what they may have meant by "single user" is a single process (perhaps with multiple threads). And that's assuming you're making queries every time an event occurs versus persisting data at particular points in time.
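
A small sketch of the WAL and `BEGIN IMMEDIATE` behaviour discussed above, using Python's `sqlite3` with manual transaction control (`isolation_level=None`). The `hits` table and the temp-file path are made up for the example; it assumes a local filesystem where WAL mode is available.

```python
import os
import sqlite3
import tempfile

path = os.path.join(tempfile.mkdtemp(), "wal_demo.db")

# isolation_level=None: the sqlite3 module issues no implicit BEGINs.
writer = sqlite3.connect(path, isolation_level=None)
mode = writer.execute("PRAGMA journal_mode=WAL").fetchone()[0]
writer.execute("CREATE TABLE hits (n INTEGER)")
writer.execute("INSERT INTO hits VALUES (1)")

reader = sqlite3.connect(path, isolation_level=None)

# BEGIN IMMEDIATE takes the write lock up front instead of on first write.
writer.execute("BEGIN IMMEDIATE")
writer.execute("INSERT INTO hits VALUES (2)")

# In WAL mode the reader is not blocked; it sees the last committed state.
seen_during = reader.execute("SELECT COUNT(*) FROM hits").fetchone()[0]

writer.execute("COMMIT")
seen_after = reader.execute("SELECT COUNT(*) FROM hits").fetchone()[0]

reader.close()
writer.close()
```

A second connection that tried its own `BEGIN IMMEDIATE` while the first was open would have to wait (or fail with a busy error), which is the single-writer limit the thread keeps coming back to.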

No usernames, passwords, firewall rules, IP addresses, no nothing, just a single file with all the data inside. And it doesn't have a native date type. I'd also like to add the possibility of using SQLite databases as an application file format: While this makes things easy, you should not do this for any application file format where you expect your users to share files. (Having said that: the fact that VACUUM can be run into a new file is super nice, and everyone should know that it exists.) sh is perhaps a closer comparison than C here: it's at least possible to write portable C accidentally. SQLite3 is one of the most popular relational databases (and is built into Python) in this tech world. One could, for instance, run static sites with the data stored in a SQLite database. That doesn't help you deploy a new version of a web application you're making code changes to (which is the kind of thing that would need a database). As of right now, we have 12 years' worth of data. But IMO that would just warrant an entirely separate software package.

And we store the corresponding pages as individual files like git does in an objects dir. > But use caution: this locking mechanism might not work correctly if the database file is kept on an NFS filesystem. SQLite can promote connections, but it does not demote them back to read-only. How do you ensure data is not lost to oblivion if a catastrophic system failure occurs? "The trick for extracting performance from SQLite is to use a single connection object for all operations, and to serialize transactions using your application's logic rather than depending on the database to do this for you.". Right; lots of modern document software, for instance, basically saves continuously; so a Google Docs-style application could have such a load. If the speed of your write-only workload is limited by whole file locks rather than by raw I/O speed, you can probably consolidate your writes into fewer transactions (i.e. It absolutely has good use cases, but those are rather niche. You either need to find a solution that has a sync system built in (such as

Perhaps not great for production since WordPress automatically updates itself, and you would have to keep up with any changes. A more advanced feature of this binding mechanism is that, if you provide a bunch of specific callbacks to SQLite, you can expose anything you like as a virtual table that can be queried and operated on as if it were just another SQLite table. FWIW the webapp I use to help organize my community's conference has almost 0 CPU utilization with 50 users. Most web servers support graceful restarts, i.e. [0] https://media.ccc.de/v/36c3-10701-select_code_execution_from Let me say that again: SQLite does NOT execute arbitrary code that it finds in the database file. Do you mean I don't need Go microservices talking gRPC deployed in multiple Kubernetes clusters with bash-script-based migrations via GitOps with my hand-made multi-cloud automation (in case we move clouds) following all the SCRUM practices to ship working software? Say if I start doing a project with 5 different technologies in the stack, I pick at least 4 that I'm solid with and maybe 1 wildcard. I would not say that it is acknowledged as a mistake. The vast majority of web applications do not see thousands of requests per second, so I think SQLite is a great fit for most web apps. This could give multi-region backup. Guess this daily 2 seconds of downtime is worth it when that reduces cost, say, from $2000/month to $20/month.

SQLite also has a similar SQL statement built in, VACUUM INTO. It describes how access patterns that would be bad practices with networked databases are actually appropriate for SQLite as an in-memory DB. You can back up the current SQLite memory database (a database created in `:memory:` mode) to disk, back up a disk database, and of course also use this to copy a database. But that is not something that can be changed now without breaking the millions of applications and trillions of database files that already use SQLite's flexible typing feature.
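
For the two backup routes mentioned above, here is a brief sketch in Python: `sqlite3.Connection.backup` (available since Python 3.7) copies an in-memory database to disk, and `VACUUM INTO` (SQLite 3.27 or newer, hence the version guard) writes a compacted copy of a database to a new file. The `notes` table and the file names are made up for the example.

```python
import os
import sqlite3
import tempfile

def backup_to_disk(source, dest_path):
    """Copy every page of `source` into a database file at `dest_path`."""
    dest = sqlite3.connect(dest_path)
    try:
        source.backup(dest)
    finally:
        dest.close()

# An in-memory database with a little data in it.
mem = sqlite3.connect(":memory:")
mem.execute("CREATE TABLE notes (body TEXT)")
mem.execute("INSERT INTO notes VALUES ('hello')")
mem.commit()

workdir = tempfile.mkdtemp()
api_copy = os.path.join(workdir, "api_copy.db")
backup_to_disk(mem, api_copy)

# VACUUM INTO needs a target file that does not exist yet.
vacuum_copy = os.path.join(workdir, "vacuum_copy.db")
have_vacuum_into = sqlite3.sqlite_version_info >= (3, 27, 0)
if have_vacuum_into:
    disk = sqlite3.connect(api_copy)
    disk.execute("VACUUM INTO ?", (vacuum_copy,))
    disk.close()
```

Either copy is an ordinary database file, so it is safe to rsync or upload afterwards, unlike the live file while a transaction is open.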

mORMot (. IMO a lot of organizations should start re-investing in on-premise as well. In retrospect, perhaps it would have been better if SQLite had merely implemented an ANY datatype so that developers could explicitly state when they wanted to use flexible typing, rather than making flexible typing the default. Personally I love jq[0] for this purpose. See https://www.sqlite.org/security.html for additional discussion of security precautions you can take when using SQLite with potentially hostile files. That's pretty incredible when you think about it. In order to be able to rsync an SQLite database, you will need to either: Use SQLite's own CLI commands for creating a backup copy of the database file. SQLite is light, easy, and useful in a variety of situations. -- with the DB included -- and I bet no request ever will be above 100ms. How do backups work? Then, at the end of the week (after 7 days, that is), we use AWS Glue (PySpark specifically) to process these weekly database files and create a Parquet (snappy compression) file which is then imported into Clickhouse for analytics and reporting. I think the biggest issue can be distilled to "lack of concurrent network access". https://www.sqlite.org/c3ref/update_hook.html I am willing to convert this to a module. And for blue/green deployments. It is running SQLite with a simple in-memory LRU cache (just a dict) that gets purged when a mutating query (INSERT, UPDATE or DELETE) is executed. Some web applications are just never going to scale out for any reason, and for those SQLite might be appropriate. are by definition valid strategies when it comes to SQLite. https://github.com/aergoio/aergolite - AergoLite is replicated SQLite but secured by a blockchain. 
Many organizations use it at the production level because of its lightness, robustness, and portability. Agreed, but it's not only a question of scaling. on top of it. Suppose, on the other hand, that a single user generated around a 1% write utilization when they were actively using the website (which still seems pretty high to me).
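
The "dict cache purged on any mutating query" setup described above might look something like this. It is a guess at the shape, not the commenter's code: the `CachedDB` class and its method names are hypothetical, and the cache here is a plain unbounded dict rather than a true LRU.

```python
import sqlite3

class CachedDB:
    """Caches SELECT results in a dict; any write throws the whole cache away."""

    MUTATING = ("INSERT", "UPDATE", "DELETE")

    def __init__(self, path=":memory:"):
        self.conn = sqlite3.connect(path)
        self.cache = {}

    def query(self, sql, params=()):
        key = (sql, tuple(params))
        if key not in self.cache:
            self.cache[key] = self.conn.execute(sql, params).fetchall()
        return self.cache[key]

    def execute(self, sql, params=()):
        with self.conn:  # commit on success, roll back on error
            self.conn.execute(sql, params)
        if sql.lstrip().upper().startswith(self.MUTATING):
            self.cache.clear()  # coarse but always-correct invalidation
```

Clearing everything on each write is crude, but for read-heavy sites where writes are rare it keeps the invalidation logic trivially correct.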