Imagine the buffer descriptors as a circular list. Choosing a Method for Storage Acquisition: the IDMS and OPSYS options on the BUFFER statements determine the method of storage acquisition (in local mode, if the operating system supports extended addressing; under the central version, if there is an XA storage pool that supports system-type storage). However, the buffer manager requires help to perform this task. Before a data block B1 is output to the disk, all log records concerned with the data in B1 must be output to stable storage. By default, the background writer wakes every 200 ms (set by bgwriter_delay) and flushes at most bgwriter_lru_maxpages pages (the default is 100).

Whenever the nextVictimBuffer sweeps an unpinned descriptor, its usage_count is decreased by 1. Buffers use space in the main memory, but reduce the amount of I/O performed on behalf of your applications. In PostgreSQL, two background processes, the checkpointer and the background writer, are responsible for this task. However, you can define more buffers to enhance database performance and optimize storage. Section 8.4 describes how the buffer manager works. It is a light-weight lock that can be used in both shared and exclusive modes. When reading or writing a huge table, PostgreSQL uses a ring buffer rather than the buffer pool. The benefit of the ring buffer is obvious.

2) The nextVictimBuffer points to the second descriptor (buffer_id 2).

A buffer manager manages data transfers between shared memory and persistent storage and can have a significant impact on the performance of the DBMS. Insufficient Storage Under the Central Version. The buffer pool slot size is 8 KB, which is equal to the size of a page. A buffer table can be logically divided into three parts: a hash function, hash bucket slots, and data entries. See this result of commitfest. In this document, the array is referred to as the buffer descriptors layer. For more information about how to enable file caching and the options available in different operating systems, see "DMCL Statements." Release the io_in_progress and content_lock locks. In this case, the ring buffer size is 16 MB. The background writer continues to flush dirty pages little by little with minimal impact on database activity. Then, update the flags of the descriptor with buffer_id 5; the dirty bit is set to '0' and the other bits are initialized. The Buffer Manager is used by the other modules to read, write, allocate, and de-allocate pages. (7) Load the desired page data from storage to the victim buffer slot. For example, an unpinned dirty descriptor is represented by X. In this case, the buffer manager performs the following steps. At z/OS sites, VSAM database files can use the IBM Batch Shared Resources Subsystem (Batch LSR) by specifying the SUBSYS JCL parameter. Thus, the usage_count is decreased by 1 and the nextVictimBuffer advances to the third candidate. a) Transaction Ti goes into the commit state after its commit log record has been output to the stable storage. The refcount and usage_count of the descriptor are increased by 1. While this structure has many fields, the main ones are shown in the following. The structure BufferDesc is defined in src/include/storage/buf_internals.h. Therefore, you can optimize storage use by assigning files that contain the same or similar block sizes to the same buffer.
The answer is explained in the README located under the buffer manager's source directory. Acquire the BufMappingLock partition in shared mode. The checkpointer process writes a checkpoint record to the WAL segment file and flushes dirty pages whenever checkpointing starts. Another advantage is that OPSYS storage is acquired outside the IDMS storage pool, while IDMS storage is acquired from the IDMS storage pool. A collection of buffer descriptors forms an array. Therefore, if unpinned descriptors exist in the buffer pool, this algorithm can always find a victim, whose usage_count is 0, by rotating the nextVictimBuffer. Change the states of the corresponding descriptor. This feature offers performance improvements for files that are actively used. Both processes have the same function (flushing dirty pages); however, they have different roles and behaviours. The PostgreSQL buffer manager works very efficiently. Thus, each slot can store an entire page.
The maximum number of pages is constrained only by available memory resources. In this case, the ring buffer size is 256 KB. Look up the buffer table (not found according to the assumption). To enhance run-time performance, you can associate individual files with separate buffers to reduce the contention for buffer pages. While accessing the storage, a process holds an exclusive io_in_progress lock of the corresponding descriptor. Note that basic operations (look up, insertion, and deletion of data entries) are not explained here. Descriptors that have been retrieved from the freelist always hold a page's metadata. For example, a buffer that is defined with an initial number of pages of 1000 results in a single storage request for the entire 1000 pages if OPSYS is specified, or 1000 storage requests if IDMS is specified. The selected page is referred to as a victim page. Without adequate storage, the paging overhead that is associated with the system can increase significantly.

In other words, non-empty descriptors continue to be used and do not return to the freelist. Choosing an optimum number of pages comes with experience from tuning your database. Tables or indexes have been cleaned up using the VACUUM FULL command. Removing tuples physically or compacting free space on the stored page (performed by vacuum processing and HOT). Freezing tuples within the stored page.

Consider the case in which all buffer pool slots are occupied but the requested page is not stored. To achieve a balance between storage resources and I/O, it is important to choose the optimal buffer attributes. This section describes the locks necessary for the explanations in the subsequent sections. Each BufMappingLock partition guards its portion of the corresponding hash bucket slots. In certain operating systems, you can cache database files in separate storage. The minimum number of pages in a buffer is three. First, the simplest case is described, i.e., the desired page is already stored in the buffer pool. The Buffer Manager makes calls to the underlying Permanent Memory Manager, which actually performs these operations on disk pages. This section describes how the buffer manager works. It is up to the various components (that call the buffer manager) to make sure that all pinned pages are subsequently unpinned. VSAM and the Batch LSR subsystem can create a large buffer pool in hyperspace, which minimizes the number of I/Os. Section 9.7 describes checkpointing and when it begins. (4) Advance the nextVictimBuffer to the next descriptor (if at the end, wrap around) and return to step (1). When inserting or deleting entries, a backend process holds an exclusive lock. Sections 8.2 and 8.3 describe the details of the buffer manager internals. For example, use the DCMT VARY BUFFER command to increase the number of buffer pages during peak system usage or to reduce the number of buffer pages at other times. The PostgreSQL buffer manager comprises three layers, i.e., the buffer table, the buffer descriptors, and the buffer pool. (1) Obtain the candidate buffer descriptor pointed to by the nextVictimBuffer. (2) If the candidate buffer descriptor is unpinned, examine its usage_count; if it is pinned, skip it. In version 9.1 or earlier, the background writer regularly performed the checkpoint processing. Two types of caching are available. Memory caching: files are cached in a dataspace or in z-storage (storage above the 64-bit address line). (8) Release the new BufMappingLock partition.
In PostgreSQL, freespace maps, which are described in Section 5.3.4, play the same role as the freelists in Oracle. (2) Insert the new entry, which holds the relation between the tag of the first page and the buffer_id of the retrieved descriptor, in the buffer table. A hash table is used to know what page frame a given disk page (i.e., with a given pageId) occupies. If the BufMappingLock were a single system-wide lock, one of the two processes would have to wait for the other, depending on which started processing first. (6) Delete the old entry from the buffer table, and release the old BufMappingLock partition. The behavior of the ReadBufferExtended function depends on three logical cases. Repeat until a victim is found. This descriptor is unpinned but its usage_count is 2. Set the dirty bit to '1' using a bitwise operation. When reading a page, the PostgreSQL process acquires the shared content_lock of the corresponding buffer descriptor. However, a value of at least five helps avoid excessive database I/O operations and reduces contention among transactions for space in the buffer. If you want to know more details, refer to this discussion on the pgsql-ML and this article. The second and subsequent pages are loaded in a similar manner. Similarly, the buffer_tag '{(16821, 16384, 37721), 1, 3}' identifies the page that is in the third block of the freespace map whose OID and fork number are 37721 and 1, respectively. In version 9.2, the checkpointer process was separated from the background writer process. The aim is to minimize disk accesses and unsatisfiable requests. To reduce the number of physical I/Os, increase the size of Database Buffers. For example, the buffer table internally uses a spin lock to delete an entry. Therefore, the cache hit ratio decreases. Increase the values of its refcount and usage_count by 1.
The size of a buffer page must equal the size of the largest database page that uses the buffer. b) Before the commit log record of Ti can be output to stable storage, all log records concerning transaction Ti must have been output to the stable storage. (2) Acquire the BufMappingLock partition that covers the obtained hash bucket slot in shared mode (this lock will be released in step (5)). This variable is used in the page replacement algorithm described in Section 8.4.4. This rule is called the write-ahead logging (WAL) rule. c) Before a block of data in the main memory can be output to the database, all log records concerned with the data in that block must have been output to the stable storage. A dirty page is denoted as X. An exclusive content_lock is acquired when doing one of the following. The official README file shows more details. Another advantage of a coupling facility cache is that it can be shared by more than one central version. A backend process can modify a page in the buffer pool (e.g., by inserting tuples). (1) Look up the buffer table (we assume it is not found). When any condition listed below is met, a ring buffer is allocated to shared memory: when a relation whose size exceeds one-quarter of the buffer pool size (shared_buffers/4) is scanned. In version 9.6, the spinlocks of the buffer manager are replaced with atomic operations. Under the central version, the buffer is larger because it supports multiple, concurrent applications. In addition, the PostgreSQL clock sweep page replacement algorithm is described in the final subsection. (3) Look up the entry whose tag is 'Tag_C' and obtain the buffer_id. (4) Pin the buffer descriptor for buffer_id 2. For example, you can assign a frequently used index to a separate file and then assign the file to a separate buffer. The built-in hash function maps buffer_tags to the hash bucket slots.
(1) When reading a table or index page, a backend process sends a request that includes the page's buffer_tag to the buffer manager. When the buffer manager receives a request, PostgreSQL uses the buffer_tag of the desired page. Two backend processes can simultaneously hold respective BufMappingLock partitions in exclusive mode in order to insert new data entries. This is a usual practice for dynamic memory resource allocation. You can size a buffer differently for local mode and central version use. This subsection describes how a backend process reads a page from the buffer manager. The buffer pool layer stores data file pages, such as tables and indexes, as well as freespace maps and visibility maps. Load the desired page data from storage to the buffer pool slot. The allocated ring buffer is released immediately after use. The pseudocode and description of the algorithm are as follows. A specific example is shown in the accompanying figure. (7) Access the buffer pool slot with buffer_id 4. When the flags or other fields (e.g., refcount and usage_count) are checked or changed, a spinlock is used. Insert the new entry to the buffer table. (9) Access the buffer pool slot with buffer_id 5. These layers (Fig. 8.3) are described in detail in the following subsections. Flush the victim page data to storage. The freelist exists so that the first descriptor can be obtained immediately. If the requested page is not stored in the buffer pool, the buffer manager loads the page from persistent storage to one of the buffer pool slots and then returns the buffer_ID of that slot. The buffer manager implements a page replacement strategy when a free frame is not available.

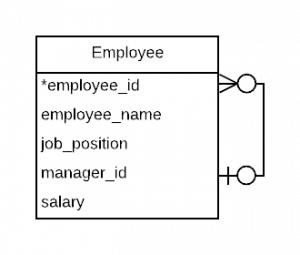

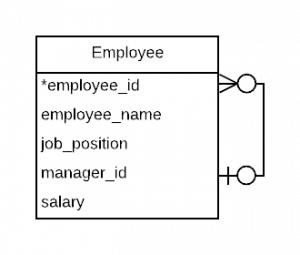

A buffer descriptor holds the metadata of the stored page in the corresponding buffer pool slot.
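As a rough illustration of the metadata a descriptor holds, the following Python sketch models a buffer_tag and a descriptor with the fields mentioned in this document (buffer_id, refcount, usage_count, and a dirty flag). The class names, method names, and field layout are illustrative assumptions; the actual BufferDesc is a C structure defined in src/include/storage/buf_internals.h and has more fields.

```python
from dataclasses import dataclass
from typing import NamedTuple, Optional, Tuple


class BufferTag(NamedTuple):
    """Identifies a page: RelFileNode (three OIDs), fork number, block number."""
    rel_file_node: Tuple[int, int, int]  # (tablespace OID, database OID, relation OID)
    fork_number: int                     # 0 = table, 1 = freespace map, 2 = visibility map
    block_number: int


@dataclass
class BufferDescriptor:
    """Hypothetical, simplified stand-in for a buffer descriptor."""
    buffer_id: int
    tag: Optional[BufferTag] = None  # None models an empty descriptor
    refcount: int = 0                # number of processes currently pinning the page
    usage_count: int = 0             # number of accesses since the page was loaded
    dirty: bool = False

    def pin(self) -> None:
        # Pinning increases both refcount and usage_count by 1.
        self.refcount += 1
        self.usage_count += 1

    def unpin(self) -> None:
        # After the page has been accessed, only refcount is decreased.
        self.refcount -= 1

    @property
    def is_pinned(self) -> bool:
        return self.refcount > 0


# The example tag used in this document: block 7 of relation 37721 (fork 0).
tag = BufferTag((16821, 16384, 37721), 0, 7)
desc = BufferDescriptor(buffer_id=4, tag=tag)
desc.pin()
desc.unpin()
```

After one pin and unpin, the descriptor is unpinned again but keeps a usage_count of 1, which is what the page replacement algorithm later inspects.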

A data entry comprises two values: the buffer_tag of a page, and the buffer_id of the descriptor that holds the page's metadata. The buffer manager uses many locks for many different purposes. Inserting rows (i.e., tuples) into the stored page or changing the t_xmin/t_xmax fields of tuples within the stored page. The page size for a buffer must be able to hold the largest database page to be read into that buffer. Manage the Size of the Buffer Dynamically in Response to Need. Once a database is operating under the central version, you can dynamically change the number of pages in the central version buffer with a DCMT VARY BUFFER statement. Each buffer descriptor uses two light-weight locks, content_lock and io_in_progress_lock, to control access to the stored page in the corresponding buffer pool slot. (5) Acquire the new BufMappingLock partition and insert the new entry to the buffer table. When a backend process wants to access a desired page, it calls the ReadBufferExtended function. The role of the Buffer Manager is to make pages from the disk available to processes in the main memory (buffer pool). Dirty pages should eventually be flushed to storage. Section 8.6 describes the checkpointer and background writer. Even though the number of hash bucket slots is greater than the number of the buffer pool slots, collisions may occur. Acquire the exclusive io_in_progress_lock of the corresponding descriptor. Therefore, the storage pool must be large enough to hold the buffer. Under local mode, the buffer is smaller because it supports only a single application. (3) The backend process accesses the buffer_ID's slot (to read the desired page). Figure 8.6 shows how the first page is loaded. Please note that the locks described in this section are parts of a synchronization mechanism for the buffer manager; they do not relate to any SQL statements or SQL options. Since version 8.1, PostgreSQL has used clock sweep because it is simpler and more efficient than the LRU algorithm used in previous versions.
When the PostgreSQL server starts, the state of all buffer descriptors is empty. (3) Acquire the BufMappingLock partition. (4) Save the metadata of the new page to the retrieved descriptor. For example, the buffer_tag '{(16821, 16384, 37721), 0, 7}' identifies the page that is in the seventh block whose relation's OID and fork number are 37721 and 0, respectively; the relation is contained in the database whose OID is 16384 under the tablespace whose OID is 16821. The system stores the database in non-volatile storage and brings the blocks of data into the main memory as required. Obtain the old entry, which contains the buffer_id of the victim pool slot, from the buffer table and pin the victim pool slot in the buffer descriptors layer. To increase the size without modifying the buffer definition, specify more buffer pages in the BUFNO parameter of the JCL statement identifying a file that is associated with the buffer. The PostgreSQL buffer manager comprises a buffer table, buffer descriptors, and buffer pool, which are described in the next section. Depending on the amount of system activity, you can use the DCMT VARY BUFFER command to change the number of pages in the buffer. Acquire the shared content_lock and the exclusive io_in_progress lock of the descriptor with buffer_id 5 (released in step 6). Two specific examples of spinlock usage are given below. Changing other bits is performed in the same manner. 3) The nextVictimBuffer points to the third descriptor (buffer_id 3). When a page is requested, the buffer manager brings it in and pins it. Each case is described in the following subsections. Buffers defined to run under the central version can be assigned an initial number of pages and a maximum number of pages. PostgreSQL's freelist is only a linked list of empty buffer descriptors.
Thus, buffer pool slots can be read by multiple processes simultaneously. Figure 8.7 shows a typical example of the effect of splitting BufMappingLock. The descriptor state changes relative to particular conditions, which are described in the next subsection. Such a policy is called the force policy. This feature allows you to optimize use of memory resources. In addition to replacing victim pages, the checkpointer and background writer processes flush dirty pages to storage. Acquire a spinlock of the buffer descriptor. (3) Load the new page from storage to the corresponding buffer pool slot. The nextVictimBuffer, an unsigned 32-bit integer, always points to one of the buffer descriptors and rotates clockwise. The buffer descriptor structure is defined by the structure BufferDesc. The refcount values of the corresponding buffer descriptors are decreased by 1. Please note that the freelist in PostgreSQL is a completely different concept from the freelists in Oracle. The BufMappingLock is split into partitions to reduce the contention in the buffer table (the default is 128 partitions). To read a page, a backend process acquires a shared content_lock of the buffer descriptor that stores the page. This descriptor is unpinned and its usage_count is 0; thus, this is the victim in this round. The buffer manager must select one page in the buffer pool that will be replaced by the requested page. The buffer manager does not keep track of all the pages that have been pinned by a transaction. However, this descriptor is skipped because it is pinned.
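The effect of splitting the BufMappingLock can be sketched as follows: each buffer_tag maps to one of 128 partitions, and only the lock for that partition is taken. This is a minimal Python sketch under simplifying assumptions: the use of Python's built-in hash(), the modulo mapping, the per-partition dictionaries, and threading.Lock (which has no shared mode) are all illustrative choices, not PostgreSQL's implementation.

```python
import threading

NUM_PARTITIONS = 128  # default number of BufMappingLock partitions

# One lock per partition (plain mutexes here; the real locks also have a shared mode).
partition_locks = [threading.Lock() for _ in range(NUM_PARTITIONS)]

# Hypothetical buffer table: one dict per partition, mapping buffer_tag -> buffer_id.
buffer_table = [dict() for _ in range(NUM_PARTITIONS)]


def partition_of(buffer_tag):
    """Map a buffer_tag to a partition index (assumed: hash modulo partition count)."""
    return hash(buffer_tag) % NUM_PARTITIONS


def insert_entry(buffer_tag, buffer_id):
    """Insert a data entry while holding only the lock of the covering partition."""
    p = partition_of(buffer_tag)
    with partition_locks[p]:
        buffer_table[p][buffer_tag] = buffer_id


def lookup_entry(buffer_tag):
    """Return the buffer_id for buffer_tag, or None if the page is not in the pool."""
    p = partition_of(buffer_tag)
    with partition_locks[p]:
        return buffer_table[p].get(buffer_tag)


# A tag written as a plain tuple: (RelFileNode, fork number, block number).
tag_e = ((16821, 16384, 37721), 0, 7)
insert_entry(tag_e, 4)
```

Two processes that insert entries whose tags fall into different partitions take different locks and therefore do not wait for each other.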

By using Batch LSR, you can reduce the number of pages in the buffer that is associated with the file in your DMCL. When inserting (and updating or deleting) rows to the page, a Postgres process acquires the exclusive content_lock of the corresponding buffer descriptor (note that the dirty bit of the page must be set to '1'). By changing the size dynamically, you can determine the optimum size for the buffer. Local Mode vs. Central Version Specifications. If you want to know the details, refer to this discussion. (3) Flush (write and fsync) the victim page data if it is dirty; otherwise proceed to step (4). Many replacement algorithms have been proposed previously. When data entries are mapped to the same bucket slot, this method stores the entries in the same linked list. One might expect that transactions would force-output all modified blocks to disk when they commit. Use JCL to Increment the Size of the Local Mode Buffer. Memory caching provides larger caching capabilities than database buffers (even those allocated above the 16-megabyte line). When initially allocating a buffer or when increasing the size of a buffer in response to a DCMT command.

(2) The buffer manager returns the buffer_ID of the slot that stores the requested page. The buffer table requires many other locks. The structure of the buffer descriptor is described in this subsection. The buffer_tag comprises three values: the RelFileNode and the fork number of the relation to which its page belongs, and the block number of its page. Create the buffer_tag of the desired page (in this example, the buffer_tag is 'Tag_E') and compute the hash bucket slot. (4) Create a new data entry that comprises the buffer_tag 'Tag_E' and buffer_id 4; insert the created entry to the buffer table. (1) Retrieve an empty descriptor from the top of the freelist, and pin it (i.e., increase its refcount and usage_count by 1). In this example, the buffer_id of the victim slot is 5 and the old entry is 'Tag_F, id=5'. Initial and Maximum Allocations Under the Central Version. Each descriptor will have one of the above states. The buffer pool is a simple array that stores data file pages, such as tables and indexes.

However, related descriptors are added to the freelist again, and the descriptor state becomes empty, when one of certain events occurs (for example, when tables or indexes have been cleaned up using the VACUUM FULL command). A modified page that has not yet been flushed to storage is referred to as a dirty page. The ring buffer is a small and temporary buffer area. Typically, in the field of computer science, page selection algorithms are called page replacement algorithms. The io_in_progress lock is used to wait for I/O on a buffer to complete. The ring buffer avoids this issue.

The clock sweep is described in a later subsection. (2) Set the dirty bit of the descriptor to '1'. Change the states of the corresponding descriptor.
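Setting the dirty bit with a bitwise operation while holding the descriptor's spinlock can be sketched as follows. This is an illustrative Python sketch: the flag values, the Descriptor class, the function names, and the use of threading.Lock in place of a spinlock are assumptions made for the example, not PostgreSQL's definitions.

```python
import threading

# Hypothetical flag bits; the actual values in PostgreSQL may differ.
BM_DIRTY = 1 << 0
BM_VALID = 1 << 1


class Descriptor:
    """Minimal stand-in for a buffer descriptor with a flags word."""

    def __init__(self):
        self.flags = 0
        self.header_lock = threading.Lock()  # stands in for the descriptor's spinlock


def set_dirty(desc):
    """Set the dirty bit to 1 without disturbing the other flag bits."""
    with desc.header_lock:
        desc.flags |= BM_DIRTY


def clear_dirty(desc):
    """Set the dirty bit to 0, e.g. after the page has been flushed."""
    with desc.header_lock:
        desc.flags &= ~BM_DIRTY


d = Descriptor()
d.flags = BM_VALID
set_dirty(d)
```

Changing other bits follows the same pattern: acquire the lock, apply the OR or AND-NOT mask, release the lock.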

1) The nextVictimBuffer points to the first descriptor (buffer_id 1). Use the MSG=I subparameter to display the Batch LSR subsystem messages on the job log.

This algorithm is a variant of NFU (Not Frequently Used) with low overhead; it selects less frequently used pages efficiently.
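The clock sweep can be sketched in a few lines. The Python below is a simplified, single-threaded illustration: descriptors are plain dictionaries, the function name is made up for the example, and all locking is omitted. It assumes that at least one unpinned descriptor exists; otherwise the loop would never terminate.

```python
def clock_sweep(descriptors, start):
    """Return the index of the victim descriptor, sweeping from `start`.

    descriptors: list of dicts with 'refcount' and 'usage_count' keys.
    Side effect: usage_count of each swept unpinned descriptor is decreased by 1.
    """
    n = len(descriptors)
    i = start
    while True:
        candidate = descriptors[i]
        if candidate["refcount"] == 0:        # unpinned
            if candidate["usage_count"] == 0:
                return i                      # victim found
            candidate["usage_count"] -= 1     # not yet a victim: age it
        # Pinned, or usage_count was above 0: advance and wrap around at the end.
        i = (i + 1) % n


# Indices are 0-based here, so index 2 corresponds to the third descriptor.
pool = [
    {"refcount": 1, "usage_count": 3},  # pinned: skipped
    {"refcount": 0, "usage_count": 2},  # unpinned: usage_count decreased to 1
    {"refcount": 0, "usage_count": 0},  # unpinned with usage_count 0: the victim
]
victim = clock_sweep(pool, 0)
```

The example pool mirrors the three-descriptor walk-through in this document: the first descriptor is skipped because it is pinned, the second has its usage_count decreased, and the third is selected.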

Use of the Batch LSR subsystem and the number and location of the buffers are controlled by the SUBSYS JCL parameter and its subparameters. When searching for an entry in the buffer table, a backend process holds a shared BufMappingLock. Therefore, the buffer table uses separate chaining with linked lists to resolve collisions. Why 256 KB? Applications can access this index in its own buffer. The buffer pool is an array, i.e., each slot stores one page of a data file. The fork numbers of tables, freespace maps and visibility maps are defined as 0, 1 and 2, respectively. In this process, if any block is modified in the main memory, it should be stored on disk before that block is overwritten by another block. The hash function is a composite function of calc_bucket() and hash().

If you use a coupling facility cache, you must have enough coupling facility space to hold the most frequently accessed pages to make its use worthwhile. The first section provides an overview. Refer to this description. Indices of the buffer pool array are referred to as buffer_ids. The buffer descriptors layer contains an unsigned 32-bit integer variable, i.e., nextVictimBuffer.

In general, if most files in the DMCL use a common buffer, make the number of buffer pages at least three times the maximum number of anticipated concurrent database transactions. You want to choose the optimal buffer attributes to achieve a balance between storage resources and I/O.

Whenever the nextVictimBuffer sweeps an unpinned descriptor, its usage_count is decreased by 1. Buffers use space in the main memory, but reduce the amount of I/O performed on behalf of your applications. In PostgreSQL, two background processes, checkpointer and background writer, are responsible for this task. However, you can define more buffers to enhance database performance and optimize storage. Heap Only Tuple (HOT) and Index-Only Scans, 9.2 Transaction Log and WAL Segment Files, 9.10 Continuous Archiving and Archive Logs, 10.3 timelineId and Timeline History File, 10.4 Point-in-Time Recovery with Timeline History File, 11.3 Managing More Than One Standby Server, 11.4 Detecting Failures of Standby Servers. Section 8.4 describes how buffer manager works. It can be used in shared and exclusive modes. It is a light-weight lock that can be used in both shared and exclusive modes. PostgreSQL uses a ring buffer rather than the buffer pool. The benefit of the ring buffer is obvious.

2) The nextVictimBuffer points to the second descriptor (buffer_id 2). 8.4.

A buffer manager manages data transfers between shared memory and persistent storage and can have a significant impact on the performance of the DBMS. Insufficient Storage Under the Central Version. The buffer pool slot size is 8 KB, which is equal to the size of a page. A buffer table can be logically divided into three parts: a hash function, hash bucket slots, and data entries (Fig. See this result of commitfest. For example, In this document, the array is referred to as the buffer descriptors layer. For more information about how to enable file caching and the options available in different operating systems, see "DMCL Statements.". Release the io_in_progress and content_lock locks. In this case, the ring buffer size is 16 MB. The background writer continues to flush dirty pages little by little with minimal impact on database activity. Then, update the flags of the descriptor with buffer_id 5; the dirty bit is set to '0 and initialize other bits. The Buffer Manager is used by the other modules to read / write / allocate / de-allocate pages. (7) Load the desired page data from the storage to the victim buffer slot. For example, an unpinned dirty descriptor is represented by X. In this case, the buffer manager performs the following steps: Then, by implementing a page At z/OS sites, VSAM database files can use the IBM Batch Shared Resources Subsystem (Batch LSR) by specifying the SUBSYS JCL parameter. thus, the usage_count is decreased by 1 and the nextVictimBuffer advances to the third candidate. a) Transaction Ti goes into the commit state after the

In other words, non-empty descriptors continue to be used do not return to the freelist.

Choosing an optimum number of pages comes with experience from tuning your database. Tables or indexes have been cleaned up using the VACUUM FULL command. (t_xmin and t_xmax are described in, Removing tuples physically or compacting free space on the stored page (performed by vacuum processing and HOT, which are described in, Freezing tuples within the stored page (freezing is described in. Foreign Data Wrappers (FDW) and Parallel Query, 5.3 Inserting, Deleting, and Updating Tuples, 7.

Choosing an optimum number of pages comes with experience from tuning your database. Tables or indexes have been cleaned up using the VACUUM FULL command. (t_xmin and t_xmax are described in, Removing tuples physically or compacting free space on the stored page (performed by vacuum processing and HOT, which are described in, Freezing tuples within the stored page (freezing is described in. Foreign Data Wrappers (FDW) and Parallel Query, 5.3 Inserting, Deleting, and Updating Tuples, 7. When all buffer pool slots are occupied but the requested page is not stored, (. The following is its representation as a pseudo-function. You can also use, To achieve a balance between storage resources and I/O, it is important to choose the optimal buffer attributes. This section describes the locks necessary for the explanations in the subsequent sections. Each BufMappingLock partition guards the portion of the corresponding hash bucket slots. When the SQL commands listed below are executed. In certain operating systems, you can cache database files in a separate storage. The minimum number of pages in a buffer is three. First, the simplest case is described, i.e. The Buffer Manager makes calls to This section describes how the buffer manager works. It is up to the various components Copyright 2005-2022 Broadcom. VSAM and the Batch LSR subsystem can create a large buffer pool in hyperspace which minimizes the number of I/Os. Section 9.7 describes checkpointing and when it begins. (4) Advance the nextVictimBuffer to the next descriptor (if at the end, wrap around) and return to step (1). When inserting or deleting entries, a backend process holds an exclusive lock. Sections 8.2 and 8.3 describe the details of the buffer manager internals. For example, use the DCMT VARY BUFFER command to increase the number of buffer pages during peak system usage or to reduce the number of buffer pages at other times. The PostgreSQL buffer manager comprises three layers, i.e. 
(1) Obtain the candidate buffer descriptor pointed to by, (2) If the candidate buffer descriptor is. In version 9.1 or earlier, the background writer regularly performed checkpoint processing. Two types of caching are available: Memory caching -- Files are cached in a dataspace or in z-storage (storage above the 64-bit address line). must have been output to the stable storage. (8) Release the new BufMappingLock partition. In PostgreSQL, freespace maps, which are described in Section 5.3.4, play the same role as the freelists in Oracle. (2) Insert the new entry, which holds the relation between the tag of the first page and the buffer_id of the retrieved descriptor, in the buffer table. nextVictimBuffer. A hash table is used to know what page frame a given disk page (i.e., If the BufMappingLock is a single system-wide lock, one of the two processes must wait for the other to finish, depending on which started processing first. (6) Delete the old entry from the buffer table, and release the old BufMappingLock partition. The behavior of the ReadBufferExtended function depends on three logical cases. 1. Repeat until a victim is found. This descriptor is unpinned but its usage_count is 2; Set the dirty bit to '1' using a bitwise operation. the PostgreSQL process acquires the shared content_lock of the corresponding buffer descriptor. file shows more details. 3. However, a value of at least five helps avoid excessive database I/O operations and reduces contention among transactions for space in the buffer. 8.12. If you want to know more details, refer to this discussion on the pgsql-ML and this article. The second and subsequent pages are loaded in a similar manner. Similarly, the buffer_tag '{(16821, 16384, 37721), 1, 3}' identifies the page that is in the third block of the freespace map whose OID and fork number are 37721 and 1, respectively. In version 9.2, the checkpointer process was separated from the background writer process. 
minimizing disk accesses and unsatisfiable requests. To reduce the number of physical I/Os, increase the size of Database Buffers. For example, the buffer table internally uses a spin lock to delete an entry. When reading a page, therefore, the cache hit ratio decreases. Increase the values of its refcount and usage_count by 1. from the buffer table and pin the victim pool slot in the buffer descriptors layer. The size of a buffer page must equal the size of the largest database page that uses the buffer. b) Before the

when reading rows from the page in the buffer pool slot, A data entry comprises two values: the buffer_tag of a page, and the buffer_id of the descriptor that holds the page's metadata. The buffer manager uses many locks for many different purposes. tuples) into the stored page or changing the t_xmin/t_xmax fields of tuples within the stored page The page size for a buffer must be able to hold the largest database page to be read into that buffer. Manage the Size of the Buffer Dynamically in Response to Need, Once a database is operating under the central version, you can dynamically change the number of pages in the central version buffer with a DCMT VARY BUFFER statement. Each buffer descriptor uses two light-weight locks, content_lock and io_in_progress_lock, to control access to the stored page in the corresponding buffer pool slot. (5) Acquire the new BufMappingLock partition and insert the new entry into the buffer table: 1. When a backend process wants to access a desired page, it calls the ReadBufferExtended function. The role of the Buffer Manager is to make page from the Dirty pages should eventually be flushed to storage; Section 8.6 describes the checkpointer and background writer. Inserting rows (i.e. PostgreSQL has used clock sweep because it is simpler and more efficient than the LRU algorithm used in previous versions. Even though the number of hash bucket slots is greater than the number of the buffer pool slots, Acquire the exclusive io_in_progress_lock of the corresponding descriptor. Therefore, the storage pool must be large enough to hold the buffer. Under local mode, the buffer is smaller because it supports only a single application. (3) The backend process accesses the buffer_ID's slot (to read the desired page). Figure 8.6 shows how the first page is loaded. Please note that the locks described in this section are part of a synchronization mechanism for the buffer manager; they do not relate to any SQL statements or SQL options. 
When the PostgreSQL server starts, the state of all buffer descriptors is empty. (3) Acquire the BufMappingLock partition in. (4) Save the metadata of the new page to the retrieved descriptor. The buffer_tag '{(16821, 16384, 37721), 0, 7}' identifies the page that is in the seventh block whose relation's OID and fork number are 37721 and 0, respectively; the relation is contained in the database whose OID is 16384 under the tablespace whose OID is 16821. The system stores the database in non-volatile storage and brings the blocks of data into main memory as required. obtain the old entry, which contains the buffer_id of the victim pool slot, To increase the size without modifying the buffer definition, specify more buffer pages in the BUFNO parameter of the JCL statement identifying a file that is associated with the buffer. When a PostgreSQL process loads/writes page data from/to storage, pinned by a transaction. The PostgreSQL buffer manager comprises a buffer table, buffer descriptors, and a buffer pool, which are described in the next section. Depending on the amount of system activity, you can use the DCMT VARY BUFFER command to change the number of pages in the buffer. Acquire the shared content_lock and the exclusive io_in_progress lock of the descriptor with buffer_id 5 (released in step 6). Two specific examples of spinlock usage are given below: Changing other bits is performed in the same manner. for the requested page in the buffer pool. log record has been output to the stable storage. the underlying Permanent Memory Manager, which actually performs these 3) The nextVictimBuffer points to the third descriptor (buffer_id 3). When a page is requested, the buffer manager brings it in and pins it, and 8.5). Each case is described in the following subsections. Buffers defined to run under the central version can be assigned an initial number of pages and a maximum number of pages. PostgreSQL's freelist is only a linked list of empty buffer descriptors. 
Thus, buffer pool slots can be read by multiple processes simultaneously. Figure 8.7 shows a typical example of the effect of splitting BufMappingLock. with a given pageId) occupies. The descriptor state changes relative to particular conditions, which are described in the next subsection. Such a policy is called a force policy. This feature allows you to optimize use of memory resources. In addition to replacing victim pages, the checkpointer and background writer processes flush dirty pages to storage. increase its refcount and usage_count by 1). Acquire a spinlock of the buffer descriptor. operations on disk pages. Such a policy is called. insert the created entry into the buffer table. a buffer tag. (3) Load the new page from storage to the corresponding buffer pool slot. The nextVictimBuffer, an unsigned 32-bit integer, always points to one of the buffer descriptors and rotates clockwise. The buffer descriptor structure is defined by the structure BufferDesc. the refcount values of the corresponding buffer descriptors are decreased by 1. Please note that the freelist in PostgreSQL is a completely different concept from the freelists in Oracle. The BufMappingLock is split into partitions to reduce contention in the buffer table (the default is 128 partitions). a backend process acquires a shared content_lock of the buffer descriptor that stores the page. This descriptor is unpinned and its usage_count is 0; the buffer manager must select one page in the buffer pool that will be replaced by the requested page. The buffer manager does not keep track of all the pages that have been however, this descriptor is skipped because it is pinned.

By using Batch LSR, you can reduce the number of pages in the buffer that is associated with the file in your DMCL. When inserting (and updating or deleting) rows in the page, a PostgreSQL process acquires the exclusive content_lock of the corresponding buffer descriptor (note that the dirty bit of the page must be set to '1'). By changing the size dynamically, you can determine the optimum size for the buffer by monitoring the, Local Mode vs. Central Version Specifications. If you want to know the details, refer to this discussion. (3) Flush (write and fsync) the victim page data if it is dirty; otherwise proceed to step (4). Thus, many replacement algorithms have been proposed previously. replacement strategy. When data entries are mapped to the same bucket slot, One might expect that transactions would force-output all modified blocks to disk when they commit. Use JCL to Increment the Size of the Local Mode Buffer. Memory caching provides larger caching capabilities than database buffers (even those allocated above the 16-megabyte line). When initially allocating a buffer or when increasing the size of a buffer in response to a DCMT command.

2.

(2) The buffer manager returns the buffer_ID of the slot that stores the requested page.

The buffer table requires many other locks. When reading or writing a huge table, 3. The structure of the buffer descriptor is described in this subsection, The buffer_tag comprises three values: the RelFileNode and the fork number of the relation to which its page belongs, and the block number of its page. Create the buffer_tag of the desired page (in this example, the buffer_tag is 'Tag_E') and compute the hash bucket slot. 5. (4) Create a new data entry that comprises the buffer_tag 'Tag_E' and buffer_id 4; (1) Retrieve an empty descriptor from the top of the freelist, and pin it (i.e. In this example, the buffer_id of the victim slot is 5 and the old entry is Initial and Maximum Allocations Under the Central Version. Each descriptor will have one of the above states. The buffer pool is a simple array that stores data file pages, such as tables and indexes. However, related descriptors are added to the freelist again the modified page, which has not yet been flushed to storage, is referred to as a dirty page. the desired page is already stored in the buffer pool. thus, this is the victim in this round. The ring buffer is a small and temporary buffer area. page number, and the pin count for the page occupying that frame. refcount and usage_count) are checked or changed, a spinlock is used. Typically, in the field of computer science, page selection algorithms are called page replacement algorithms. The io_in_progress lock is used to wait for I/O on a buffer to complete. It is the responsibility The ring buffer avoids this issue.

The clock sweep is described in the. this method stores the entries in the same linked list, as shown in Fig. (2) The following shows how to set the dirty bit to '1': 2. Change the states of the corresponding descriptor; the.
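Setting a descriptor flag such as the dirty bit with a bitwise operation can be sketched as follows. This is a minimal Python illustration, not the PostgreSQL source: the flag names and bit positions (`BM_DIRTY`, `BM_VALID`) and the helper functions are assumptions chosen for the example.

```python
# Illustrative flag bits; the names and positions are assumptions for this sketch.
BM_DIRTY = 1 << 0   # page has been modified and not yet flushed
BM_VALID = 1 << 1   # page contents are valid


def set_dirty(flags: int) -> int:
    """Set the dirty bit to 1 with a bitwise OR, leaving other bits untouched."""
    return flags | BM_DIRTY


def clear_dirty(flags: int) -> int:
    """Set the dirty bit to 0 with a bitwise AND against the inverted mask."""
    return flags & ~BM_DIRTY


def is_dirty(flags: int) -> bool:
    """Test whether the dirty bit is set."""
    return bool(flags & BM_DIRTY)
```

Other bits would be changed in the same way, by OR-ing or AND-ing the flags field with the mask of the bit in question.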

1) The nextVictimBuffer points to the first descriptor (buffer_id 1);

Use the MSG=I subparameter to display the Batch LSR subsystem messages on the job log.

At runtime. This algorithm is a variant of NFU (Not Frequently Used) with low overhead; it selects less frequently used pages efficiently.
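The clock sweep steps described in this chapter (take the candidate under nextVictimBuffer, skip it if pinned, pick it if its usage_count is 0, otherwise decrease usage_count and advance with wrap-around) can be sketched roughly as below. This is a simplified single-process Python model that omits all locking; the `Descriptor` class and the `clock_sweep` function are hypothetical names, not PostgreSQL's implementation.

```python
from dataclasses import dataclass


@dataclass
class Descriptor:
    buffer_id: int
    refcount: int = 0      # greater than 0 means the descriptor is pinned
    usage_count: int = 0


def clock_sweep(descriptors, next_victim):
    """Return (victim buffer_id, final position of the clock hand).

    `next_victim` is an index into `descriptors`. The sketch assumes at
    least one descriptor is unpinned; otherwise the loop never terminates.
    """
    n = len(descriptors)
    while True:
        candidate = descriptors[next_victim]       # step (1)
        if candidate.refcount == 0:                # step (2): unpinned?
            if candidate.usage_count == 0:         # step (3): victim found
                return candidate.buffer_id, next_victim
            candidate.usage_count -= 1             # step (3): age the page
        next_victim = (next_victim + 1) % n        # step (4): wrap around
```

With three descriptors where the first is pinned, the second is unpinned with usage_count 2, and the third is unpinned with usage_count 0, the sweep skips the first, decrements the second to 1, and selects the third, which matches the walkthrough in the text.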

Use of the Batch LSR subsystem and the number and location of the buffers are controlled by the SUBSYS JCL parameter and its subparameters. When searching for an entry in the buffer table, a backend process holds a shared BufMappingLock. Therefore, the buffer table uses separate chaining with linked lists to resolve collisions. Why 256 KB? Applications can access this index in its own buffer, while. The buffer pool is an array, i.e., each slot stores one page of a data file. The fork numbers of tables, freespace maps and visibility maps are defined as 0, 1 and 2, respectively. In this process, if any block is modified in main memory, it must be written to disk before its buffer can be reused for another block. The hash function is a composite function of calc_bucket() and hash().
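A buffer table that maps a buffer_tag to a buffer_id and resolves collisions by separate chaining might look roughly like the following. This is a hedged Python sketch: `BufferTag` and `BufferTable` are hypothetical names, Python's built-in `hash()` stands in for the real hash function, and each chain is a Python list rather than a true linked list.

```python
from typing import NamedTuple, Optional


class BufferTag(NamedTuple):
    rel_file_node: tuple   # (tablespace OID, database OID, relation OID)
    fork_number: int       # 0 = table, 1 = freespace map, 2 = visibility map
    block_number: int


class BufferTable:
    """Maps buffer_tag -> buffer_id; colliding entries share one bucket chain."""

    def __init__(self, n_buckets: int = 8):
        self.buckets = [[] for _ in range(n_buckets)]

    def _chain(self, tag: BufferTag) -> list:
        # Built-in hash() is a stand-in for the real hash function.
        return self.buckets[hash(tag) % len(self.buckets)]

    def insert(self, tag: BufferTag, buffer_id: int) -> None:
        self._chain(tag).append((tag, buffer_id))

    def lookup(self, tag: BufferTag) -> Optional[int]:
        for entry_tag, buffer_id in self._chain(tag):
            if entry_tag == tag:
                return buffer_id
        return None

    def delete(self, tag: BufferTag) -> None:
        chain = self._chain(tag)
        chain[:] = [entry for entry in chain if entry[0] != tag]
```

For instance, inserting `BufferTag((16821, 16384, 37721), 0, 7)` with buffer_id 4 and then calling `lookup` with the same tag returns 4; a tag that was never inserted returns `None`, which corresponds to the case where the page is not in the buffer pool.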

If you use a coupling facility cache, you must have enough coupling facility space to hold the most frequently accessed pages, to make its use worthwhile. The first section provides an overview and (that call the buffer manager) to make sure that all pinned pages are subsequently Refer to this description. Indices of the buffer pool array are referred to as buffer_ids. The buffer descriptors layer contains an unsigned 32-bit integer variable, i.e.

In general, if most files in the DMCL use a common buffer, make the number of buffer pages at least three times the maximum number of anticipated concurrent database transactions. Choose buffer attributes that balance storage resources against I/O.